Designing a Storage Area Network for the Department of Engineering Science

Objective

The objective of this project was to use mixed-integer programming (MIP) models to automatically design a minimum-cost storage area network (SAN). We designed this SAN as a possible alternative to the storage system that was used (up until 2007) by the Department of Engineering Science (DES) in The University of Auckland.

Problem Description

When we started this project in 2006, the DES storage system consisted of:

- 3 servers: two used as domain controllers (Server 1 for staff and Server 2 for students) and one (Server 3) used purely for data storage;

- Server 1 and Server 2 each contained two "plug-and-play" hard drives. Each drive had a capacity of 300 GB and ran at 10000 rpm;

- Both Server 1 and Server 2 mirrored their data, i.e., both hard drives in a server contained a copy of the data accessed by that server;

- A new storage device (with 15 TB of storage) was to be added to the storage system.

Storage Devices

To replicate the functionality of the current DES storage system we need to have two storage devices and one backup storage device. The two storage devices must model the behaviour of the current embedded storage devices, so they will be disk arrays with two 300 GB, 10000 rpm disks. The backup storage device will be identical to the storage device being added to the current storage system.

Servers

The new storage system will be connected to the original servers (Server 1, Server 2, and Server 3) and also to two new servers (New Server 1 and New Server 2). The new servers will initially be used for running iSCSI software, iSCSI Target, and testing the SAN before it is connected to the DES servers. Once the decision to use the SAN has been made, the new servers will be used predominantly for running iSCSI Target.

Reliability

Each server and storage device must have two connections to the SAN fabric to allow for two or more disjoint paths between any server/storage device pair.

Switches, Hubs and Links

Initially we used a fully-connected, single-edge, Core-Edge topology for our SAN design:

- Fully-Connected means that all the server ports and all the storage device ports are connected to the SAN;

- Single-Edge means that both server ports and storage device ports connect to a single layer of edge switches;

- Core-Edge is a topology that uses edge switches to connect to hosts (e.g., servers, client machines, etc.) and devices (e.g., storage devices such as disks, disk arrays, etc.) and core switches to connect the edge switches to each other. All links run either between the hosts/devices and the edge switches or between the edge switches and the core switches. To preserve network symmetry, all edge switches are the same switch type and all core switches are the same switch type (although the core switch type may be different from the edge switch type).

| Switch Type | Cost ($) | Ports |
|---|---|---|
| Cameo | 73.83 | 5 |
| DLink | 89 | 8 |
| CNet | 275 | 16 |
| Linksys | 366.35 | 24 |
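To make the trade-off between switch cost and port count concrete, the following is a minimal brute-force sketch in Python. It is not the MIP model from our publications. It assumes 16 end ports (5 servers and 3 storage devices with two connections each), exactly one link from every edge switch to every core switch, at least two edge and two core switches, and it ignores cable costs and bandwidth. The function name `cheapest_core_edge` and its parameters are hypothetical.

```python
from itertools import product

# Switch catalogue from the table above: name -> (cost in $, number of ports).
SWITCH_TYPES = {
    "Cameo": (73.83, 5),
    "DLink": (89.00, 8),
    "CNet": (275.00, 16),
    "Linksys": (366.35, 24),
}


def cheapest_core_edge(end_ports=16, max_switches=8, min_edge=2, min_core=2):
    """Enumerate symmetric core-edge designs and return the cheapest one.

    Assumptions (illustrative only): one link between each edge switch and
    each core switch, a single edge switch type, a single core switch type.
    Returns (cost, edge_type, n_edge, core_type, n_core) or None.
    """
    best = None
    for edge_name, core_name in product(SWITCH_TYPES, repeat=2):
        edge_cost, edge_ports = SWITCH_TYPES[edge_name]
        core_cost, core_ports = SWITCH_TYPES[core_name]
        counts = product(range(min_edge, max_switches + 1),
                         range(min_core, max_switches + 1))
        for n_edge, n_core in counts:
            # Each edge switch spends one port per core switch on uplinks.
            host_ports_per_edge = edge_ports - n_core
            if host_ports_per_edge <= 0:
                continue
            # All server and storage ports must fit on the edge layer.
            if n_edge * host_ports_per_edge < end_ports:
                continue
            # Each core switch needs one port per edge switch.
            if core_ports < n_edge:
                continue
            cost = round(n_edge * edge_cost + n_core * core_cost, 2)
            candidate = (cost, edge_name, n_edge, core_name, n_core)
            if best is None or candidate < best:
                best = candidate
    return best


if __name__ == "__main__":
    print(cheapest_core_edge())
```

The enumeration only checks port counts, so its answer should not be read as the design reported in this project; the MIP models also account for aspects this sketch leaves out.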

Approach

We want to design a fully-connected, single-edge, Core-Edge SAN from Ethernet switches to connect 5 servers to 3 storage devices. We will use Cat6e Ethernet cable to link the servers, storage devices and Ethernet switches, and select our switches from a list of possible switch types. We developed a MIP model for core-edge SAN design in AMPL and solved it using the CPLEX solver. For a full discussion of our models and results, see our publications on SAN design.

Outcomes

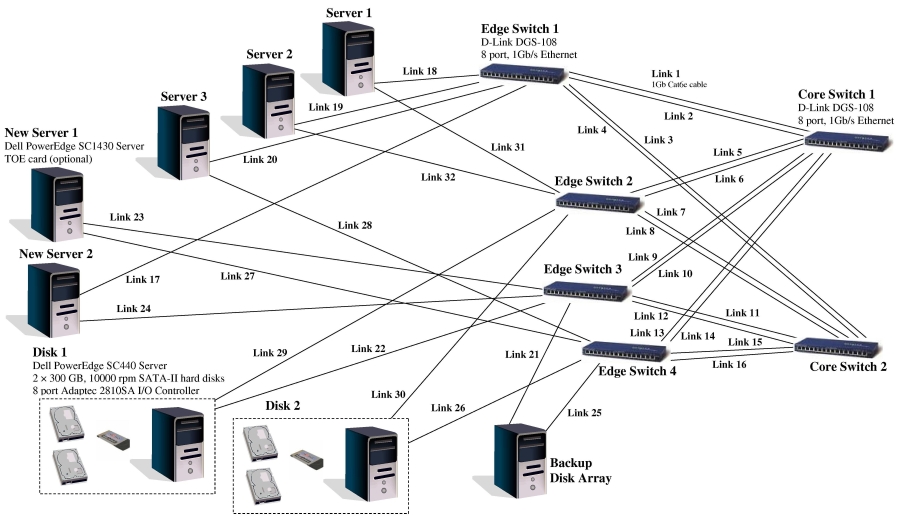

We solved this problem using mixed-integer programming. The resulting configuration of the Core-Edge SAN is shown below: Here is a full size image (approximately A3 size).

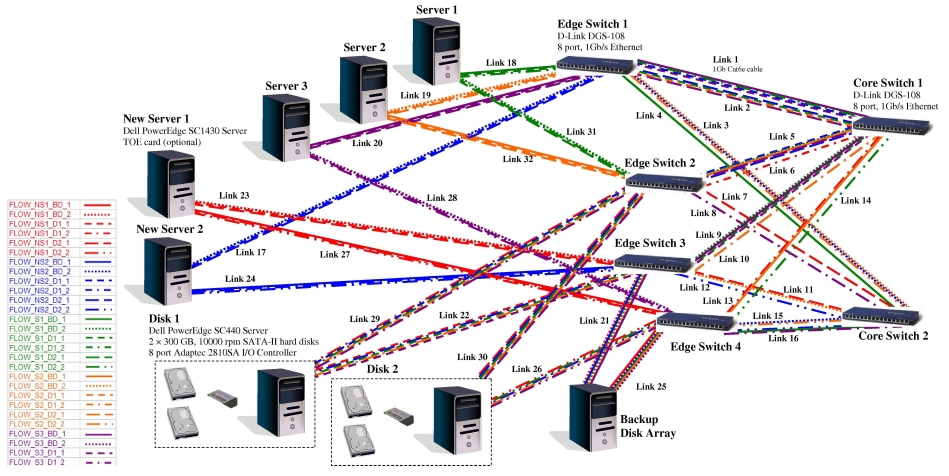

After finding this network, we wanted to see how much traffic it could handle reliably. Using another MIP, we found that the network could handle 200 MB/s of data traffic between all server-storage device pairs with diverse protection reliability (i.e., 2 node disjoint paths to support each flow). The data flow diagram is shown below:

Here is a full size image (approximately A3 size).
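The requirement of two node-disjoint paths per server-storage pair can be checked on a candidate topology with a short script. The sketch below is illustrative only: the topology it builds (3 edge switches, 2 core switches, each host attached to two different edge switches) and all names are assumptions, not the network we designed. It relies on Menger's theorem: two non-adjacent nodes have two node-disjoint paths exactly when no single other node separates them.

```python
from collections import deque
from itertools import product


def build_core_edge(n_servers=5, n_storage=3, n_edge=3, n_core=2):
    """Build a hypothetical dual-homed core-edge graph as an adjacency dict."""
    adj = {}

    def link(a, b):
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    servers = [f"server{i + 1}" for i in range(n_servers)]
    storage = [f"storage{i + 1}" for i in range(n_storage)]
    edges = [f"edge{i + 1}" for i in range(n_edge)]
    cores = [f"core{i + 1}" for i in range(n_core)]
    # Each host or device gets two connections, to two different edge switches.
    for i, host in enumerate(servers + storage):
        link(host, edges[i % n_edge])
        link(host, edges[(i + 1) % n_edge])
    # Every edge switch links to every core switch.
    for edge, core in product(edges, cores):
        link(edge, core)
    return adj, servers, storage


def connected(adj, src, dst, removed=None):
    """Breadth-first search from src to dst, skipping the node `removed`."""
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for nxt in adj[node]:
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def has_two_node_disjoint_paths(adj, src, dst):
    """True if src and dst stay connected after removing any one other node.

    Assumes src and dst are not directly linked, which holds for servers
    and storage devices in a core-edge topology.
    """
    others = [n for n in adj if n not in (src, dst)]
    return all(connected(adj, src, dst, removed=n) for n in others)


if __name__ == "__main__":
    adj, servers, storage = build_core_edge()
    for s, d in product(servers, storage):
        print(s, d, has_two_node_disjoint_paths(adj, s, d))
```

This only tests connectivity. It says nothing about whether the paths can carry a given traffic level, which is what the second MIP addresses.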

In 2007 NDSG received a grant from The University of Auckland's Faculty of Engineering CAPEX fund to implement the core-edge SAN we designed in this project. The details of the implementation work can be found in the Implementing a Storage Area Network Prototype project.

Publications

- A Mixed-Integer Approach to Core-Edge Design of Storage Area Networks, C. G. Walker, M. J. O’Sullivan and T. D. Thompson, Computers and Operations Research 34 (10), 2976-3000, 2007

- Core-Edge Design of Storage Area Networks - A Single-Edge Formulation with Problem-Specific Cuts, C. G. Walker, M. J. O’Sullivan and T. D. Thompson, Computers and Operations Research 37 (5), 916-926, 2010

Topic revision: r5 - 2011-09-08 - TWikiAdminUser
